I have 48 domain classes in my Grails 2.1 project, and I use Grails Database Migration Plugin 1.2 for database management. Recently I noticed that starting the application has become terribly slow, even when there are no changes to apply.

I switched the liquibase package to the debug logging level and found that it takes about 15 seconds to parse changelog.groovy and the 20 files included in it!

Prepare benchmark

I couldn’t believe it, so I created two new, clean changelogs:

    $ grails dbm-generate-changelog changelog.groovy
    $ grails dbm-generate-changelog changelog.xml

Both changelogs contain 229 change sets, which is enough to benchmark the parsers that read them. The two parsers in question are:

- grails.plugin.databasemigration.GrailsChangeLogParser (reads changelog.groovy)
- liquibase.parser.core.xml.XMLChangeLogSAXParser (reads changelog.xml)
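Before comparing parse times, it is worth confirming that both files really describe the same amount of work. The following is a minimal sketch, assuming the generated XML uses the usual databaseChangeLog root with one changeSet element per change set; it counts them with the JDK's built-in SAX API, the same event-driven approach the XMLChangeLogSAXParser name refers to. The class name ChangeSetCounter and the default path grails-app/migrations/changelog.xml are my own assumptions, so adjust them to your project.

```java
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.parsers.SAXParser;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.SAXException;
import org.xml.sax.helpers.DefaultHandler;

public class ChangeSetCounter {

    /** Streams the XML once and counts changeSet elements without building a DOM. */
    public static int countChangeSets(InputStream xml) {
        final int[] count = {0};
        DefaultHandler handler = new DefaultHandler() {
            @Override
            public void startElement(String uri, String localName, String qName, Attributes attributes) {
                // Namespace-aware parsing exposes the bare local name, whatever prefix the file uses.
                if ("changeSet".equals(localName)) {
                    count[0]++;
                }
            }
        };
        try {
            SAXParserFactory factory = SAXParserFactory.newInstance();
            factory.setNamespaceAware(true);
            SAXParser parser = factory.newSAXParser();
            parser.parse(xml, handler);
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalStateException("Could not parse changelog", e);
        }
        return count[0];
    }

    public static void main(String[] args) throws IOException {
        // Assumed location of the dbm-generate-changelog output; pass another path as the first argument.
        String path = args.length > 0 ? args[0] : "grails-app/migrations/changelog.xml";
        try (InputStream in = new FileInputStream(path)) {
            System.out.println(countChangeSets(in) + " change sets in " + path);
        }
    }
}
```

Note that this only counts change sets in the file itself and does not follow include elements, which is fine for a freshly generated single-file changelog.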

For the second test I only need to change one line in Config.groovy, replacing changelog.groovy with changelog.xml:

    grails.plugin.databasemigration.updateOnStart = true
    grails.plugin.databasemigration.updateOnStartFileNames = ["changelog.groovy"]
    // grails.plugin.databasemigration.updateOnStartFileNames = ["changelog.xml"]

    grails.plugin.databasemigration.updateOnStart = true
    grails.plugin.databasemigration.updateOnStartFileNames = ["changelog-all.groovy"]
    // grails.plugin.databasemigration.updateOnStartFileNames = ["changelog-all.xml"]

Profile with JProfiler

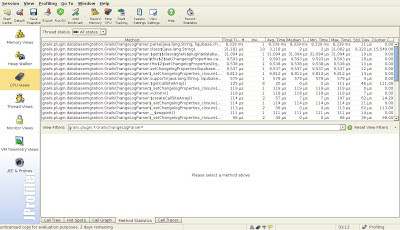

I want to profile execution time, so I use JProfiler from ej-technologies to measure it. Note that I don’t want to benchmark the SQL queries executed by Liquibase; I am only interested in the parse method of these two classes.

Here’s how I set up JProfiler:

I switch to CPU Views – Method statistics and click “Record”. Here are the results for both parsers:

Results for changelog.groovy

Results for changelog.xml
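JProfiler gives precise per-method numbers, but for a rough cross-check from plain code a small wall-clock harness around the two parse calls can be enough. This is a sketch under my own assumptions, not how the numbers in this post were produced: ParseTimer and its methods are hypothetical, and the two Runnable bodies are placeholders where you would call each parser’s parse method on your changelog (I don’t reproduce the Liquibase signature here). It needs Java 8 or later for the lambdas.

```java
import java.util.Arrays;

public class ParseTimer {

    /** Runs the task `warmups` times untimed, then `runs` times timed; returns the median in milliseconds. */
    public static double medianMillis(Runnable task, int warmups, int runs) {
        if (runs <= 0) {
            throw new IllegalArgumentException("runs must be positive");
        }
        for (int i = 0; i < warmups; i++) {
            task.run(); // untimed, so class loading and JIT compilation happen before measuring
        }
        double[] samples = new double[runs];
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            task.run();
            samples[i] = (System.nanoTime() - start) / 1_000_000.0;
        }
        Arrays.sort(samples);
        return runs % 2 == 1
                ? samples[runs / 2]
                : (samples[runs / 2 - 1] + samples[runs / 2]) / 2.0;
    }

    /** How many times faster the fast variant is than the slow one. */
    public static double speedup(double slowMillis, double fastMillis) {
        return slowMillis / fastMillis;
    }

    public static void main(String[] args) {
        // Hypothetical placeholders: replace the empty bodies with calls to the two parsers.
        Runnable parseGroovy = () -> { /* GrailsChangeLogParser: parse changelog.groovy here */ };
        Runnable parseXml = () -> { /* XMLChangeLogSAXParser: parse changelog.xml here */ };
        double groovyMs = medianMillis(parseGroovy, 3, 10);
        double xmlMs = medianMillis(parseXml, 3, 10);
        System.out.printf("groovy: %.1f ms, xml: %.1f ms, speedup: %.1fx%n",
                groovyMs, xmlMs, speedup(groovyMs, xmlMs));
    }
}
```

For example, speedup(8339, 139) returns about 59.99. Keep in mind that the application parses the changelog exactly once on a cold JVM at startup, so warmups = 0 and runs = 1 is closer to what you actually wait for, while warmed-up medians say more about the parsers themselves.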

Analysis

My suspicion was correct: 8,339 ms vs 139 ms, so parsing XML is 60 times faster! I want to jump and sing “I’m switching to XML now!”, but I have some concerns. I have a production database that I need to stay compatible with, and I would have to rewrite all my Groovy changelog files by hand. That is not trivial: it is a time-consuming and error-prone task.

So as much as I’d like to switch to XML now, I won’t. But if you are starting your adventure with the Database Migration Plugin today, here is my advice: if you start from scratch, use XML.

For now I’ve submitted a new JIRA issue, “GrailsChangeLogParser – parse method is very slow”, and I hope it can be greatly improved.